MSM finally gets that the sun’s magnetic field has flipped | Watts Up With That?:


Indonesia must be able to develop environmentally friendly science and technology suited to the country's own stage of development, without technology that wastes natural resources and energy.

It is also important to understand and internalize the philosophy of science, so that one can state scientific truth and distinguish it from "truth" obtained by other means.

The Houw Liong

http://LinkedIn.com/in/houwliong

31 December 2013

28 December 2013

27 December 2013

26 December 2013

19 December 2013

13 December 2013

10 December 2013

06 December 2013

Colorectal Cancer Classification using PCA and Fisherface Feature Extraction Data from Pathology Microscopic Image

sainsfilteknologi | Sains, Filsafat dan Teknologi: "Colorectal Cancer Classification using PCA and Fisherface Feature Extraction Data from Pathology Microscopic Image"


25 November 2013

04 November 2013

28 October 2013

10 October 2013

03 October 2013

14 September 2013

10 September 2013

09 September 2013

23 August 2013

10 August 2013

02 August 2013

29 July 2013

18 July 2013

17 July 2013

IMPLEMENTATION OF ARTIFICIAL INTELLIGENCE ON GAME BOARD GOMOKU BY USING RENJU RULES

Jurnal Telematika, Institut Teknologi Harapan Bangsa, Indonesia

IMPLEMENTASI ARTIFICIAL INTELLIGENCE PADA GAME BOARD GOMOKU DENGAN MENGGUNAKAN ATURAN RENJU

Sherly Gunawan, Prof. Dr. The Houw Liong, Elishafina Siswanto

Department of Informatics Engineering, Institut Teknologi Harapan Bangsa, Jalan Dipati Ukur no. 83-84, Bandung

Abstract - Gomoku is a traditional Japanese game played on a Go board by two players. To make the game more varied, the Renju rules are added. Gomoku is a game of perfect information: each player knows exactly the opponent's position and the moves available, unlike card games, in which each player knows only the cards in their own hand. The artificial intelligence for this Gomoku game is built using the Negamax method with αβ-pruning. The result of this final project is a Gomoku application that can be played by a player against the computer, and also by the computer against the computer. Based on the test results, Negamax with αβ-pruning can find the best move, but its intelligence is affected by how the points are calculated.

Keywords: Gomoku, artificial intelligence, Negamax.
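The abstract names Negamax with αβ-pruning but gives no listing, so the following is only a minimal sketch of that technique, not the authors' implementation. To stay short it uses a 3x3 three-in-a-row board as a stand-in for Gomoku; the names `winner` and `negamax`, the board encoding (+1, -1, 0) and the scoring (+1 win, 0 draw, -1 loss) are all assumptions made for illustration. A real Gomoku engine would need a depth limit and a point-based evaluation function, which is the part the abstract says determines playing strength.

```python
from typing import List, Optional, Tuple

Board = List[int]  # 9 cells: +1 or -1 for the two players, 0 for empty

# All eight winning lines on a 3x3 board (a stand-in for five-in-a-row).
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: Board) -> int:
    """Return +1 or -1 if that player has completed a line, else 0."""
    for a, b, c in LINES:
        if board[a] != 0 and board[a] == board[b] == board[c]:
            return board[a]
    return 0


def negamax(board: Board, player: int,
            alpha: int = -2, beta: int = 2) -> Tuple[int, Optional[int]]:
    """Return (score, best_move) from the point of view of `player`.

    Scores are +1 (win), 0 (draw) and -1 (loss) for the side to move.
    """
    w = winner(board)
    if w != 0:
        return w * player, None          # terminal position: someone has won
    moves = [i for i, cell in enumerate(board) if cell == 0]
    if not moves:
        return 0, None                   # board full: draw

    best_score, best_move = -2, None
    for move in moves:
        board[move] = player             # make the move
        # The opponent's best score, negated, is our score for this move.
        score = -negamax(board, -player, -beta, -alpha)[0]
        board[move] = 0                  # undo the move
        if score > best_score:
            best_score, best_move = score, move
        alpha = max(alpha, score)
        if alpha >= beta:                # αβ cut-off: opponent avoids this line
            break
    return best_score, best_move


if __name__ == "__main__":
    score, move = negamax([0] * 9, 1)
    print("value of the empty board:", score, "first best move:", move)
```

Because the same function serves both sides (the score is simply negated at each level), computer-against-computer play only needs to call `negamax` alternately with `player` set to +1 and -1.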

16 July 2013

13 July 2013

10 July 2013

07 July 2013

04 July 2013

The philosophy of science

Scientific Methods

===============

The Houw Liong

===============

Abstract

==============================================================================================================================================

Scientific truth is based on data/facts and can be explained logically.

A scientific statement is always tested against data/facts and derived by deduction from Laws of Nature whose truth has already been tested.

So, at the outset, the Laws of Nature must first be discovered through the following process:

Observation or experiment with careful measurement, after which a hypothesis is formed.

The hypothesis is then tested by observation/experiment; if it does not fit, the hypothesis must be revised. This is repeated over and over until a tested Law of Nature is obtained.

In Classical Physics:

The observations and measurements made by Galileo yielded equations of motion for various bodies. The results showed that a body's acceleration is determined only by its mass and the force acting on it, and that this holds universally, both for bodies near the Earth and for bodies far from it, such as the Moon, the planets and the stars.

Newton generalized this result into the law of gravitation, F(1,2) = G m(1) m(2) / r(1,2)^2, together with F = m a. The outcome is certain; there is no free will.
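As a small worked example of the two formulas above, the sketch below computes the gravitational force between the Earth and the Moon and the resulting acceleration of the Moon. The function names are hypothetical, and the masses and distance are rounded textbook values assumed here for illustration; they are not taken from the post.

```python
G = 6.674e-11  # gravitational constant in m^3 kg^-1 s^-2 (rounded)


def gravitational_force(m1: float, m2: float, r: float) -> float:
    """Newton's law of gravitation: F = G * m1 * m2 / r^2 (newtons)."""
    return G * m1 * m2 / r ** 2


def acceleration(force: float, mass: float) -> float:
    """Newton's second law rearranged: a = F / m (m/s^2)."""
    return force / mass


# Rounded, assumed values for illustration only.
M_EARTH = 5.972e24     # kg
M_MOON = 7.348e22      # kg
R_EARTH_MOON = 3.844e8 # mean centre-to-centre distance in metres

force = gravitational_force(M_EARTH, M_MOON, R_EARTH_MOON)
moon_acc = acceleration(force, M_MOON)

print(f"Force between Earth and Moon: {force:.3e} N")
print(f"Acceleration of the Moon:     {moon_acc:.3e} m/s^2")
```

Given the same masses and distance, the same force and acceleration always come out, which is the sense in which the classical result is "certain".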

Quantum Physics:

Quantum physics states that, for everything that happens in the universe, only its probability can be determined. This agrees with the concept of fate. If we place ourselves at the place and time where the probability of an event is high, we can improve our fate; there is free will.

In addition, quantum physics states that electrons, protons, photons and so on exhibit wave-particle duality. When the particle nature dominates, the wave nature is not apparent; conversely, when the wave nature dominates, the particle nature is not apparent. This can be used to explain how Jesus Christ can act as man and as God at the same time.

When Jesus acts as man, Jesus must pray to God the Father and do the will of the Heavenly Father; but as the Word, Jesus, together with God the Father and the Holy Spirit, played an active role in the creation of the Universe.

Relativistic Physics (Einstein's Theory of Relativity):

The Theory of Relativity can explain the origin of space-time, in agreement with the Book of Genesis, which begins with God saying: Let there be Light.

This means that the beginning of the Universe (the Big Bang) started with light radiation whose energy equals the total energy in the universe, including matter, because Energy = Mass × Speed of Light squared (E = m c^2).

==============================================================================================================================================

References:

===============================================================================================================================================

https://en.wikipedia.org/wiki/Philosophy_of_science

==================================================


02 July 2013

01 July 2013

16 June 2013

"The Great Attractor" --Is Something Pulling Our Region of the Universe Towards a Colossal Unseen Mass?

A busy patch of space has been captured in the image below from the NASA/ESA Hubble Space Telescope. Scattered with many nearby stars, the field also has numerous galaxies in the background. Located on the border of Triangulum Australe (The Southern Triangle) and Norma (The Carpenter’s Square), this field covers part of the Norma Cluster (Abell 3627) as well as a dense area of our own galaxy, the Milky Way.

The Norma Cluster is the closest massive galaxy cluster to the Milky Way, and lies about 220 million light-years away. The enormous mass concentrated here, and the consequent gravitational attraction, mean that this region of space is known to astronomers as the Great Attractor, and it dominates our region of the Universe.

The largest galaxy visible in this image is ESO 137-002, a spiral galaxy seen edge on. In this image from Hubble, we see large regions of dust across the galaxy’s bulge. What we do not see here is the tail of glowing X-rays that has been observed extending out of the galaxy, which is invisible to an optical telescope like Hubble.

Observing the Great Attractor is difficult at optical wavelengths. The plane of the Milky Way — responsible for the numerous bright stars in this image — both outshines (with stars) and obscures (with dust) many of the objects behind it. There are some tricks for seeing through this — infrared or radio observations, for instance — but the region behind the center of the Milky Way, where the dust is thickest, remains an almost complete mystery to astronomers.

Recent evidence from telescopes in Chile's Atacama Desert appears to contradict the "great attractor" theory. Astronomers have theorized for years that something unknown appears to be pulling our Milky Way and tens of thousands of other galaxies toward itself at a breakneck 22 million kilometers (14 million miles) per hour. But they couldn’t pinpoint exactly what, or where, it is.

A huge volume of space that includes the Milky Way and super-clusters of galaxies is flowing towards a mysterious, gigantic unseen mass that astronomers have dubbed "The Great Attractor," some 250 million light years from our Solar System.

The Milky Way and Andromeda galaxies are the dominant structures in a galaxy cluster called the Local Group which is, in turn, an outlying member of the Virgo supercluster. Andromeda--about 2.2 million light-years from the Milky Way--is speeding toward our galaxy at 200,000 miles per hour.

This motion can only be accounted for by gravitational attraction, even though the mass that we can observe is not nearly great enough to exert that kind of pull. The only thing that could explain the movement of Andromeda is the gravitational pull of a lot of unseen mass--perhaps the equivalent of 10 Milky Way-size galaxies--lying between the two galaxies.

Meanwhile, our entire Local Group is hurtling toward the center of the Virgo Cluster (image above) at one million miles per hour.

The Milky Way and its neighboring Andromeda galaxy, along with some 30 smaller ones, form what is known as the Local Group, which lies on the outskirts of a “super cluster”—a grouping of thousands of galaxies—known as Virgo, which is also pulled toward the Great Attractor. Based on the velocities at these scales, the unseen mass inhabiting the voids between the galaxies and clusters of galaxies amounts to perhaps 10 times more than the visible matter.

Even so, adding this invisible material to luminous matter still brings the average mass density of the universe to only 10-30 percent of the critical density needed to "close" the universe. This suggests that the universe is "open." Cosmologists continue to debate this question, just as they are also trying to figure out the nature of the missing mass, or "dark matter."
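For a sense of scale, the critical density can be estimated from the standard Friedmann relation ρ_c = 3·H0² / (8πG). The sketch below assumes a Hubble constant of 70 km/s/Mpc, a round value chosen here for illustration and not given in the article, and the function name is hypothetical.

```python
import math

G = 6.674e-11         # gravitational constant in m^3 kg^-1 s^-2 (rounded)
MPC_IN_M = 3.0857e22  # one megaparsec in metres (rounded)


def critical_density(h0_km_s_mpc: float) -> float:
    """Critical density rho_c = 3 * H0^2 / (8 * pi * G), in kg/m^3."""
    h0_si = h0_km_s_mpc * 1000.0 / MPC_IN_M  # convert H0 to 1/s
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G)


# Assumed Hubble constant; measured values differ by a few km/s/Mpc.
rho_c = critical_density(70.0)

# The 10-30 percent range quoted in the article, as absolute densities.
low, high = 0.10 * rho_c, 0.30 * rho_c

print(f"critical density:      {rho_c:.2e} kg/m^3")
print(f"10-30 percent of that: {low:.2e} to {high:.2e} kg/m^3")
```

With these assumptions the critical density comes out at roughly 10^-26 kg/m^3, equivalent to a few hydrogen atoms per cubic metre.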

It is believed that this dark matter dictates the structure of the Universe on the grandest of scales. Dark matter gravitationally attracts normal matter, and it is this normal matter that astronomers see forming long thin walls of super-galactic clusters.

Recent measurements with telescopes and space probes of the distribution of mass in M31 (the largest galaxy in the neighborhood of the Milky Way) and other galaxies led to the recognition that galaxies are filled with dark matter. Such measurements have also shown that a mysterious force, dark energy, fills the vacuum of empty space, accelerating the universe's expansion.

Astronomers now recognize that the eventual fate of the universe is inextricably tied to the presence of dark energy and dark matter. The current standard model for cosmology describes a universe that is 70 percent dark energy, 25 percent dark matter, and only 5 percent normal matter.

We don't know what dark energy is, or why it exists. On the other hand, particle theory tells us that, at the microscopic level, even a perfect vacuum bubbles with quantum particles that are a natural source of dark energy. But a naïve calculation of the dark energy generated from the vacuum yields a value 10^120 times larger than the amount we observe. Some unknown physical process is required to eliminate most, but not all, of the vacuum energy, leaving enough to drive the accelerating expansion of the universe.

A new theory of particle physics is required to explain this physical process. The new "dark attractor" theories skirt the so-called Copernican principle, which posits that there is nothing special about us as observers of the universe, by suggesting that the universe is not homogeneous. These alternative theories explain the observed accelerated expansion of the universe without invoking dark energy, and instead assume we are near the center of a void, beyond which a denser "dark" attractor pulls outwards.

In a paper appearing in Physical Review Letters, Pengjie Zhang at the Shanghai Astronomical Observatory and Albert Stebbins at Fermilab show that a popular void model, and many others aiming to replace dark energy, don’t stand up against telescope observation.

Galaxy surveys show the universe is homogeneous, at least on length scales up to a gigaparsec. Zhang and Stebbins argue that if larger scale inhomogeneities exist, they should be detectable as a temperature shift in the cosmic microwave background—relic photons from about 400,000 years after the big bang—that occurs because of electron-photon (inverse Compton) scattering.

Focusing on the “Hubble bubble” void model, they show that in such a scenario, some regions of the universe would expand faster than others, causing this temperature shift to be greater than what is expected. But telescopes that study the microwave background, such as the Atacama telescope in Chile or the South Pole telescope, don’t see such a large shift.

Though they can’t rule out more subtle violations of the Copernican principle, Zhang and Stebbins’ test reinforces Carl Sagan's dictum that "extraordinary claims require extraordinary evidence."

The Daily Galaxy via PhysRevLett.107.041301 and http://www.nasa.gov/mission_pages/hubble/science/great-attractor.html


28 May 2013

25 May 2013

Cold Fusion Experiment Maybe Holds Promise … Possibly … Hang on a Sec ….

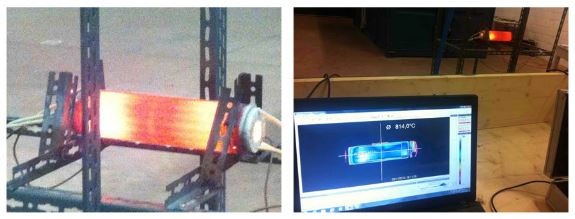

Cold fusion has been called one of the greatest scientific breakthroughs that might never happen. On the surface, it seems simple: a room-temperature reaction occurring under normal pressure. But it is a nuclear reaction, and figuring it out and getting it to work has not been simple. Any success in this area could ultimately, and seriously, change the world. Despite various claims of victory over the years since 1920, none has been replicated consistently and reliably.

But there’s buzz this week of a cold fusion experiment that has been replicated, twice. The tests have reportedly produced excess heat with roughly 10,000 times the energy density and 1,000 times the power density of gasoline.

(...)


© nancy for Universe Today, 2013.

Post tags: cold fusion, Physics, Science


22 May 2013

18 May 2013

15 May 2013

12 May 2013

10 May 2013

New Discovery Hints at Unknown Fundamental Force in the Universe

What caused the matter/antimatter imbalance is one of physics' great mysteries. It's not predicted by the Standard Model, the overarching theory that describes the laws of nature and the nature of matter. An international team of physicists has now found the first direct evidence of pear-shaped nuclei in exotic atoms. The findings could advance the search for a new fundamental force in nature that could explain why the Big Bang created more matter than antimatter, a pivotal imbalance in the history of everything.

"If equal amounts of matter and antimatter were created at the Big Bang, everything would have annihilated, and there would be no galaxies, stars, planets or people," said Tim Chupp, a University of Michigan professor of physics and biomedical engineering and co-author of a paper on the work published in the May 9 issue of Nature.

Antimatter particles have the same mass but opposite charge from their matter counterparts. Antimatter is rare in the known universe, flitting briefly in and out of existence in cosmic rays, solar flares and particle accelerators like CERN's Large Hadron Collider, for example. When they find each other, matter and antimatter particles mutually destruct or annihilate.

Physics recognizes four fundamental forces or interactions that govern how matter behaves. Gravity attracts massive bodies to one another. The electromagnetic interaction gives rise to forces on electrically charged bodies. And the strong and weak forces operate in the cores of atoms, binding together neutrons and protons or causing those particles to decay. The Standard Model describes the last three; it does not include gravity.

Physicists have been searching for signs of a new force or interaction that might explain the matter-antimatter discrepancy. Evidence of its existence would be revealed by measuring how the axis of the nuclei of the radioactive elements radon and radium lines up with their spin.

The researchers confirmed that the cores of these atoms are shaped like pears, rather than the more typical spherical orange or elliptical watermelon profiles. The pear shape makes the effects of the new interaction much stronger and easier to detect.

"The pear shape is special," Chupp said. "It means the neutrons and protons, which compose the nucleus, are in slightly different places along an internal axis."

The pear-shaped nuclei are lopsided because positive protons are pushed away from the center of the nucleus by nuclear forces, which are fundamentally different from spherically symmetric forces like gravity.

"The new interaction, whose effects we are studying, does two things," Chupp said. "It produces the matter/antimatter asymmetry in the early universe and it aligns the direction of the spin and the charge axis in these pear-shaped nuclei."

To determine the shape of the nuclei, the researchers produced beams of exotic (short-lived) radium and radon atoms at CERN's Isotope Separator facility ISOLDE. The atom beams were accelerated and smashed into targets of nickel, cadmium and tin, but due to the repulsive force between the positively charged nuclei, nuclear reactions were not possible. Instead, the nuclei were excited to higher energy levels, producing gamma rays that flew out in a specific pattern that revealed the pear shape of the nucleus.

"In the very biggest picture, we're trying to understand everything we've observed directly and also indirectly, and how it is that we happen to be here," Chupp said.

The image below shows the phase transitions of quark-gluon plasma. Studying them allows us to understand the behavior of matter in the early universe, just fractions of a second after the Big Bang, as well as conditions that might exist inside neutron stars. The fact that these two disparate phenomena are related demonstrates just how deeply the cosmic and quantum worlds are intertwined.

The research was led by University of Liverpool Physics Professor Peter Butler. "Our findings contradict some nuclear theories and will help refine others," he said.

The measurements also will help direct the searches for atomic EDMs (electric dipole moments) currently being carried out in North America and Europe, where new techniques are being developed to exploit the special properties of radon and radium isotopes.

"Our expectation is that the data from our nuclear physics experiments can be combined with the results from atomic trapping experiments measuring EDMs to make the most stringent tests of the Standard Model, the best theory we have for understanding the nature of the building blocks of the universe," Butler said.

Daily Galaxy via University of Michigan

Image credits: Brookhaven National Laboratory and University of Melbourne

Related articles

What caused the matter/antimatter imbalance is one of physics' great mysteries. It's not predicted by the Standard Model---the overarching theory that describes the laws of nature and the nature of matter. An international team of physicists has now found the first direct evidence of pear shaped nuclei in exotic atoms. The findings could advance the search for a new fundamental force in nature that could explain why the Big Bang created more matter than antimatter---a pivotal imbalance in the history of everything.

"If equal amounts of matter and antimatter were created at the Big Bang, everything would have annihilated, and there would be no galaxies, stars, planets or people," said Tim Chupp, a University of Michigan professor of physics and biomedical engineering and co-author of a paper on the work published in the May 9 issue of Nature.

Antimatter particles have the same mass but opposite charge from their matter counterparts. Antimatter is rare in the known universe, flitting briefly in and out of existence in cosmic rays, solar flares and particle accelerators like CERN's Large Hadron Collider, for example. When they find each other, matter and antimatter particles mutually destruct or annihilate.

The Standard Model describes four fundamental forces or interactions that govern how matter behaves: Gravity attracts massive bodies to one another. The electromagnetic interaction gives rise to forces on electrically charged bodies. And the strong and weak forces operate in the cores of atoms, binding together neutrons and protons or causing those particles to decay.

Physicists have been searching for signs of a new force or interaction that might explain the matter-antimatter discrepancy. The evidence of its existence would be revealed by measuring how the axis of nuclei of the radioactive elements radon and radium line up with the spin.

The researchers confirmed that the cores of these atoms are shaped like pears, rather than the more typical spherical orange or elliptical watermelon profiles. The pear shape makes the effects of the new interaction much stronger and easier to detect.

"The pear shape is special," Chupp said. "It means the neutrons and protons, which compose the nucleus, are in slightly different places along an internal axis."

The pear-shaped nuclei are lopsided because positive protons are pushed away from the center of the nucleus by nuclear forces, which are fundamentally different from spherically symmetric forces like gravity.

"The new interaction, whose effects we are studying does two things," Chupp said. "It produces the matter/antimatter asymmetry in the early universe and it aligns the direction of the spin and the charge axis in these pear-shaped nuclei."

To determine the shape of the nuclei, the researchers produced beams of exotic---short- lived---radium and radon atoms at CERN's Isotope Separator facility ISOLDE. The atom beams were accelerated and smashed into targets of nickel, cadmium and tin, but due to the repulsive force between the positively charged nuclei, nuclear reactions were not possible. Instead, the nuclei were excited to higher energy levels, producing gamma rays that flew out in a specific pattern that revealed the pear shape of the nucleus.

"In the very biggest picture, we're trying to understand everything we've observed directly and also indirectly, and how it is that we happen to be here," Chupp said.

The image below shows the phase transitions of quark-gluon plasma allows us to understand the behavior of matter in the early universe, just fractions of a second after the Big Bang, as well as conditions that might exist inside neutron stars. The fact that these two disparate phenomena are related demonstrates just how deeply the cosmic and quantum worlds are intertwined.

The research was led by University of Liverpool Physics Professor Peter Butler. "Our findings contradict some nuclear theories and will help refine others," he said.

The measurements also will help direct the searches for atomic EDMs (electric dipole moments) currently being carried out in North America and Europe, where new techniques are being developed to exploit the special properties of radon and radium isotopes.

"Our expectation is that the data from our nuclear physics experiments can be combined with the results from atomic trapping experiments measuring EDMs to make the most stringent tests of the Standard Model, the best theory we have for understanding the nature of the building blocks of the universe," Butler said.

Daily Galaxy via University of Michigan

Image credits: Brookhaven National Laboratory and University of Melbourne


"Project 1640" --Amazing New Space Technologies Analyze the Molecular Chemistry of Alien Planets


Today, there are more than 800 confirmed exoplanets -- planets that orbit stars beyond our sun -- and more than 2,700 other candidates. What are these exotic planets made of? Unfortunately, you cannot stack them in a jar like marbles and take a closer look. Instead, researchers are coming up with advanced techniques for probing the planets' makeup.

One breakthrough to come in recent years is direct imaging of exoplanets. Ground-based telescopes have begun taking infrared pictures of the planets posing near their stars in family portraits. But to astronomers, a picture is worth even more than a thousand words if its light can be broken apart into a rainbow of different wavelengths.

Those wishes are coming true as researchers are beginning to install infrared cameras on ground-based telescopes equipped with spectrographs. Spectrographs are instruments that spread an object's light apart, revealing signatures of molecules. Project 1640, partly funded by NASA's Jet Propulsion Laboratory, Pasadena, Calif., recently accomplished this goal using the Palomar Observatory near San Diego. The Project 1640 image above shows the four exoplanets orbiting HR 8799.

"In just one hour, we were able to get precise composition information about four planets around one overwhelmingly bright star," said Gautam Vasisht of JPL, co-author of the new study appearing in the Astrophysical Journal. "The star is a hundred thousand times as bright as the planets, so we've developed ways to remove that starlight and isolate the extremely faint light of the planets."

Along with ground-based infrared imaging, other strategies for combing through the atmospheres of giant planets are being actively pursued as well. For example, NASA's Spitzer and Hubble space telescopes monitor planets as they cross in front of their stars, and then disappear behind. NASA's upcoming James Webb Space Telescope will use a comparable strategy to study the atmospheres of planets only slightly larger than Earth.

In the new study, the researchers examined HR 8799, a large star orbited by at least four known giant, red planets. Three of the planets were among the first ever directly imaged around a star, thanks to observations from the Gemini and Keck telescopes on Mauna Kea, Hawaii, in 2008. The fourth planet, the closest to the star and the hardest to see, was revealed in images taken by the Keck telescope in 2010.

That alone was a tremendous feat considering that all planet discoveries up until then had been made through indirect means, for example by looking for the wobble of a star induced by the tug of planets.

Those images weren't enough, however, to reveal any information about the planets' chemical composition. That's where spectrographs are needed -- to expose the "fingerprints" of molecules in a planet's atmosphere. Capturing a distant world's spectrum (image below) requires gathering even more planet light, and that means further blocking the glare of the star.

Project 1640 accomplished this with a collection of instruments, which the team installs on the ground-based telescopes each time they go on "observing runs." The instrument suite includes a coronagraph to mask out the starlight; an advanced adaptive optics system, which removes the blur of our moving atmosphere by making millions of tiny adjustments to two deformable telescope mirrors; an imaging spectrograph that records 30 images in a rainbow of infrared colors simultaneously; and a state-of-the-art wavefront sensor that further adjusts the mirrors to compensate for scattered starlight.

"It's like taking a single picture of the Empire State Building from an airplane that reveals a bump on the sidewalk next to it that is as high as an ant," said Ben R. Oppenheimer, lead author of the new study and associate curator and chair of the Astrophysics Department at the American Museum of Natural History, N.Y., N.Y.

Their results revealed that all four planets, though nearly the same in temperature, have different compositions. Some, unexpectedly, do not have methane in them, and there may be hints of ammonia or other compounds that would also be surprising. Further theoretical modeling will help to understand the chemistry of these planets.

Meanwhile, the quest to obtain more and better spectra of exoplanets continues. Other researchers have used the Keck telescope and the Large Binocular Telescope near Tucson, Ariz., to study the emission of individual planets in the HR 8799 system.

In addition to the HR 8799 system, only two others have yielded images of exoplanets. The next step is to find more planets ripe for giving up their chemical secrets. Several ground-based telescopes are being prepared for the hunt, including Keck, Gemini, Palomar and Japan's Subaru Telescope on Mauna Kea, Hawaii.

Ideally, the researchers want to find young planets that still have enough heat left over from their formation, and thus more infrared light for the spectrographs to see. They also want to find planets located far from their stars, and out of the blinding starlight. NASA's infrared Spitzer and Wide-field Infrared Survey Explorer (WISE) missions, and its ultraviolet Galaxy Evolution Explorer, now led by the California Institute of Technology, Pasadena, have helped identify candidate young stars that may host planets meeting these criteria.

"We're looking for super-Jupiter planets located far away from their star," said Vasisht. "As our technique develops, we hope to be able to acquire molecular compositions of smaller, and slightly older, gas planets."

Still lower-mass planets, down to the size of Saturn, will be targets for imaging studies by the James Webb Space Telescope.

"Rocky Earth-like planets are too small and close to their stars for the current technology, or even for James Webb to detect. The feat of cracking the chemical compositions of true Earth analogs will come from a future space mission such as the proposed Terrestrial Planet Finder," said Charles Beichman, a co-author of the P1640 result and executive director of NASA's Exoplanet Science Institute at Caltech.

Though the larger, gas planets are not hospitable to life, the current studies are teaching astronomers how the smaller, rocky ones form.

"The outer giant planets dictate the fate of rocky ones like Earth. Giant planets can migrate in toward a star, and in the process, tug the smaller, rocky planets around or even kick them out of the system. We're looking at hot Jupiters before they migrate in, and hope to understand more about how and when they might influence the destiny of the rocky, inner planets," said Vasisht.

NASA's Exoplanet Science Institute manages time allocation on the Keck telescope for NASA. JPL manages NASA's Exoplanet Exploration program office. Caltech manages JPL for NASA.

A visualization from the American Museum of Natural History showing where the HR 8799 system is in relation to our solar system is online at http://www.youtube.com/watch?v=yDNAk0bwLrU .


28 April 2013

24 April 2013

Solar Powered Plane Soars Over the Golden Gate Bridge


The Solar Impulse airplane flies over the Golden Gate Bridge on April 23, 2013. Credit: Solar Impulse.

The world’s first solar-powered plane is stretching its wings over the US. Today it took off from Moffett Field in Mountain View, California, the home of NASA’s Ames Research Center, and flew to San Francisco, soaring over the Golden Gate Bridge.

Starting on May 1, Solar Impulse will fly across the US to New York, making several stops along the way as a kind of “get to know you” tour for the US, while the founders of Solar Impulse, Swiss pilot Bertrand Piccard and pilot Andre Borschberg, spread their message of sustainability and technology. You can read about the cross-country tour on UT and also on the Solar Impulse website. You can follow Solar Impulse’s Twitter feed for the latest news of where they are.

© nancy for Universe Today, 2013.


06 April 2013

Dyson: Climatologists are no Einsteins

The New Jersey Star Ledger printed a nice interview with Freeman Dyson:

At any rate, he is saying some things that should be important for everyone, especially every layman who wants to understand the climate debate. The climatologists don't really understand the climate; they just blindly follow computer models that are full of ad hoc fudge factors to account for clouds and other aspects.

Freeman Dyson also mentioned that increased CO2 is probably making the environment better and he estimated that about 15% of crop yields are due to the extra CO2 added by human activity. I agree with this estimate wholeheartedly. The CO2 concentration is elevated by a factor of K = 396/280 = 1.41 and my approximate rule is that crop yields scale like the square root of K, which is currently between 1.15 and 1.20.
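The arithmetic behind that estimate can be checked in a few lines. This is only a sketch of the square-root rule of thumb stated above, not a crop model; the function names are made up for the illustration, and reading the "share of yields due to the extra CO2" as (f - 1)/f, where f is the yield factor, is an assumption about how the 15% figure is meant.

```python
import math

# Concentrations quoted in the text, in ppm.
CO2_NOW_PPM = 396.0
CO2_PREINDUSTRIAL_PPM = 280.0


def co2_ratio(now=CO2_NOW_PPM, base=CO2_PREINDUSTRIAL_PPM):
    """Return K, the factor by which the CO2 concentration is elevated."""
    return now / base


def yield_factor(k):
    """Rule of thumb from the text: crop yields scale like sqrt(K)."""
    return math.sqrt(k)


def share_due_to_extra_co2(k):
    """Fraction of today's yield attributed to the extra CO2.

    Assumption for this sketch: if yields grew by the factor f,
    the share owed to the growth is (f - 1) / f.
    """
    f = yield_factor(k)
    return (f - 1.0) / f


k = co2_ratio()
print(f"K = {k:.2f}")
print(f"yield factor sqrt(K) = {yield_factor(k):.3f}")
print(f"share of yields due to extra CO2 = {share_due_to_extra_co2(k):.1%}")
```

Under these assumptions the yield factor lands inside the quoted 1.15 to 1.20 range and the share comes out close to the 15% that Dyson mentioned.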

Dyson – and the sensible journalist – also mention that the journalists are doing a lousy job, probably because they're lazy and just love to copy things from others. The hatred against the "dissenters" is similar to what it used to be in the Soviet Union; I must confirm that.

The article also contains some older videos in which Dyson was interviewed.

This 13-year-old gentleman, Alex, who gives speeches, has understood many things about the climate panic that many adults have not. He got an iPad as a reward for that. ;-)

But let me return to the "no Einsteins" claim. It's a very important one because climate science was sometimes painted almost as the ultimate discipline, superior to all the other sciences. In reality, the climate scientists are – and even before the climate hysteria took off were – the worst among the worst physical scientists. I am sure that everyone who has studied at a physics department with many possible subfields must know that. My conclusions primarily come from Prague but I feel more or less certain that they hold almost everywhere.

When you're a college freshman in a similar maths-and-physics department, you start to be exposed to various types of scientific results very soon. Some people find the maths too complicated and they give up. Some students survive. Those who survive pretty quickly find out whether they're better at the theoretical or the experimental approach to physics. Clearly, if they're into experiments, they will drift towards atomic physics, optics, vacuum physics, and perhaps condensed matter physics, aside from several other disciplines.

If they feel comfortable in advanced theory, they will lean towards theoretical physics, nuclear physics, and partly theoretical condensed matter physics. But all the statements so far were "politically correct" – implicitly assuming that everyone is equally good and merely has different interests. But that ain't the case.

Some people aren't too good at anything. In Prague, it has been pretty much official wisdom that those folks are most likely to pick atmospheric physics or geophysics and – if they care about stars – astrophysics. In some sense, these fields are conceptually stuck in the 19th century or perhaps the 18th century. They're simple enough. The layman's common sense is often enough to do them. Quantum mechanics may be viewed as the most characteristic litmus test. If a student finds it impenetrable, he or she must give up ideas about going into particle physics but also condensed matter physics, optics, and some other characteristic "20th century" disciplines.

People who are fairly good at maths but find that they are slower with quantum mechanics tend to go into (general) relativistic physics, which is a powerful field in Prague. To some extent, the detachment from quantum mechanics is correlated with the detachment from statistical methods in physics and from an experimenter's thinking. So among the smart enough folks, the relativists who have avoided quantum mechanics from their early years are probably the "least experimentally oriented" ones.

But meteorology is the ultimate refugee camp. The stereotypical idea of the job waiting for meteorology alumni is that of the "tree frogs" [a Czech synonym for "weather girls"] who forecast the weather on TV screens. Their makeup is usually more important than their knowledge and understanding and yes, meteorology also has the highest percentage of female students (not counting teaching of maths, physics, and IT) which, whether you like it or not, is also correlated with the significantly lower mathematical IQ in that subfield.

I am totally confident that every sufficiently large and representative university with a physics department could reconstruct a correlation between, e.g., the grades and the specialization that the students choose that would confirm most of the general patterns above. But at many places, these things are simply taboo. This is extremely unfortunate because the public should know where the smartest people can be found – and atmospheric physics or climatology certainly can't be listed in the answer to this question. It's important because when such things are taboo, certain people may play games and pretend that they're something that they're not.

Dyson also touched on another interesting topic, the difference between "understanding" and "sitting in front of a computer model that is assumed to understand". I have discussed this difference many times. But let me repeat that they're totally different things. While I would agree it's a waste of time – and a silly sport – to force students to do mechanical operations that may be done by a computer or to learn lots of simple rules that may be summarized in a book (because the students are downgraded to a dull memory chip or a simple CPU chip), it's necessary for a student to go through all the key steps and methods at least once so that he or she knows how they work inside. Or at least they should feel confident that if they wanted to improve their understanding of what's going on, they could penetrate into all the details within hours of extra study or less.

The climate forecasts have such large error margins that it makes no sense to pretend that you need to make a high-precision calculation. But if a high-precision calculation isn't needed, an approximate calculation is enough. And for an approximate calculation, you shouldn't need a terribly complicated algorithm that runs for a very long time. In fact, you should be able to construct a simplified picture and a calculation that may be reconstructed pretty much without a computer. In particular, if you can't derive the order-of-magnitude estimate for a quantity describing the behavior of a physical system, then you just don't understand the behavior! A computer may spit out a magic answer but you are not the computer. If you don't know exactly what the computer is doing, assuming, etc., then you can't independently "endorse" the computer's results, and you can't know what they depend upon or how robust the actual outcome of the program is.

Mechanical arithmetic calculations may be boring and of course they are not what mathematicians and physicists are supposed to be best at or spend most of their time on (a physicist or a mathematician is something other than an idiot savant, a simple fact that most of my close relatives are completely unaware of). On the other hand, much of the logic behind the sciences and behind individual arguments in science is about solid technical thinking that may be sped up with the help of a computer, but this thinking is still completely essential because science and mathematics are ultimately composed of these things! If you don't know them, you don't know science and mathematics.

An extra topic in the context of atmospheric physics is the difference between the knowledge, and the "character of knowledge", of meteorologists and climatologists. I would say that meteorologists are in much better shape when it comes to their "mental training" because they deal with lots of real-world data that affect their thinking. They have some experience. In comparison, climatologists work with a much smaller amount of data – they talk about 30-year averages but our record isn't much longer than 30 years, so there are just "several numbers". The predictions only face the real-world data after many decades, when the climatologist is already retired or dead. Yet this confrontation between real-world data and scientists' opinions is what drives the "natural selection" of ideas in empirically based disciplines – and the atmospheric physics disciplines are surely textbook examples of them.

The most extreme form of the intellectual degradation is the idea that the only important quantity to be predicted is the rate of [global] temperature increase (or, almost equivalently in the climate believers' opinion, the climate sensitivity). If someone believes it's the only number to be derived and one can fudge hundreds of parameters to do so, it's too bad! Clearly, such a predictive framework is totally worthless. A predictive framework must be able to predict more numbers than the number of parameters that have to be inserted. Needless to say, my description of the situation of the "hardcore alarmed climatology" was way too kind because they don't even verify the one and only "calculated" result – the climate sensitivity. When it differs by a factor of 3 from the observations, they just don't care. So they don't predict anything. They have absolutely no data – no "threats" for their pet ideas – that could direct their construction and improvement of the theories.
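The parameter-counting argument can be made concrete with a toy example. The sketch below is not a climate model and is not taken from the interview; the data sets are invented, and `lagrange_fit` is a hypothetical helper written for this illustration. It shows that a model with three free parameters (a quadratic) reproduces any three numbers exactly, so agreeing with those three numbers tests nothing. Only a fourth, independent number can confirm or refute the fit.

```python
from fractions import Fraction


def lagrange_fit(points):
    """Return the unique polynomial of degree < len(points) through all points.

    Exact rational arithmetic is used so that "fits exactly" really means
    a residual of zero rather than a small floating-point number.
    """
    def poly(x):
        x = Fraction(x)
        total = Fraction(0)
        for i, (xi, yi) in enumerate(points):
            term = Fraction(yi)
            for j, (xj, _) in enumerate(points):
                if i != j:
                    term *= (x - xj) / Fraction(xi - xj)
            total += term
        return total
    return poly


# Two unrelated, made-up data sets with three "observations" each.
observations_a = [(0, 1), (1, 3), (2, 2)]
observations_b = [(0, 5), (1, -4), (2, 7)]

fit_a = lagrange_fit(observations_a)
fit_b = lagrange_fit(observations_b)

# Three parameters always match three numbers: both residual lists are zero.
print([fit_a(x) - y for x, y in observations_a])
print([fit_b(x) - y for x, y in observations_b])

# The only real test is a number that was not used to tune the parameters.
print("prediction of fit_a at x = 3:", fit_a(3))
```

Whatever values are put into the two lists, the residuals vanish, which is the sense in which a framework with as many adjustable parameters as predicted numbers cannot be checked against data.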

The New Jersey Star Ledger printed a nice interview with Freeman Dyson: Climatologists are no Einsteins, says his successor (Star Ledger). They mention that when Einstein was still around, Dyson was hired by the Institute for Advanced Study in Princeton after the search for the planet's most brilliant physicist. He has done quite some work to justify these labels although I wouldn't say that it has put him at the #1 spot. He's still one of the giants of the old times who keep on walking on the globe.

Beginning in the GWPF (Benny Peiser et al.)

At any rate, he is saying some things that should be important for everyone, especially every layman, who wants to understand the climate debate. The climatologists don't really understand the climate; they just blindly follow computer models that are full of ad hoc fudge factors to account for clouds and other aspects.

Freeman Dyson also mentioned that increased CO2 is probably making the environment better and he estimated that about 15% of the crop yields are due to the extra CO2 added by the human activity. I agree with this estimate wholeheartedly. The CO2 is elevated by a factor of K = 396/280 = 1.41 and my approximate rule is that the crop yields scale like the square root of K which is currently between 1.15 and 1.20.

Dyson – and the sensible journalist – also mention that the journalists are doing a lousy job, probably because they're lazy and just love to copy things from others. The hatred against the "dissenters" is similar to what it used to be in the Soviet Union; I must confirm that.

The article also contains some older videos in which Dyson was interviewed.

This 13-year-old Gentleman, Alex, who is doing speeches has understood many things about the climate panic that many adults have not. He got an iPad as a reward for that. ;-)

But let me return to the "no Einsteins" claim. It's a very important one because the climate scientists were sometimes painted nearly as the ultimate superior discipline above all of sciences. In reality, the climate scientists are – and even before the climate hysteria took off were – the worst among the worst physical scientists. I am sure that everyone who has studied at a physics department with many possible subfields must know that. My conclusions primarily come from Prague but I feel more or less certain that they hold almost everywhere.

When you're a college freshman in a similar maths-and-physics department, you start to be exposed to various types of scientific results very soon. Some people find the maths too complicated and they give up. Some students survive. Those who survive pretty quickly find out whether they're better in the theory or the experimental approach to physics. Clearly, if they're into experiments, they will drift towards atomic physics, optics, vacuum physics, and perhaps condensed matter physics, aside from several disciplines.

If they feel comfortable in advanced theory, they will lean towards theoretical physics, nuclear physics, and partly theoretical condensed matter physics. But all the statements so far were "politically correct" – implicitly assuming that everyone is equally good and he only has different interests. But that ain't the case.

Some people aren't too good at anything. In Prague, it's been a pretty much official wisdom that those folks are most likely to pick atmospheric physics or geophysics and – if they care about stars – astrophysics. In some sense, these fields are conceptually stuck in the 19th century or perhaps 18th century. They're simple enough. The laymen's common sense is often enough to do them. Quantum mechanics may be viewed as the most characteristic litmus test. If a student finds it impenetrable, he or she must give up ideas about going to particle physics but also condensed matter physics, optics, and some other characteristic "20th century" disciplines.

The people who are sort of good at maths but they really find out they are slower with quantum mechanics tend to go to (general) relativistic physics which is a powerful field in Prague. To some extent, the detachment from quantum mechanics is correlated with the detachment from statistical methods in physics and from experimenter's thinking. So among the smart enough folks, the relativists who have avoided quantum mechanics from their early years are probably the "least experimentally oriented" ones.

But meteorology is the ultimate refugee camp. A stereotypical idea of the job waiting for the meteorology alumni are the "tree frogs" [a Czech synonym for "weather girls"] who forecast the weather on TV screens. Their makeup is usually more important than their knowledge and understanding and yes, meteorology also has the highest percentage of female students (not counting teaching of maths, physics, and IT) which, whether you like it or not, is also correlated with the significantly lower mathematical IQ in that subfield.

I am totally confident that every sufficiently large and representative university with a physics department could reconstruct a correlation between e.g. the grades and the specialization that the students choose that would confirm most of the general patterns above. But at many places, these things are just a taboo. This is extremely unfortunate because the public should know where the smartest people may be looked for – and atmospheric physics or climatology certainly can't be listed in the answer to this question. It's important because when such things are taboo, certain people may play games and pretend that they're something that they're not.

Dyson also touched on another interesting topic, the difference between "understanding" and "sitting in front of a computer model that is assumed to understand". I have discussed this difference many times. But let me repeat that they're totally different things. While I would agree it's a waste of time – and a silly sport – to force students to do mechanical operations that may be done by a computer or to learn lots of simple rules that may be summarized in a book (because the students are downgraded to a dull memory chip or a simple CPU chip), it's necessary for a student to go through all the key steps and methods at least once so that he or she knows how they work inside. Or at least students should feel confident that if they wanted to improve their understanding of what's going on, they could penetrate into all the details within hours of extra study or less.

The climate forecasts have such large error margins that it makes no sense to pretend that you need to make a high-precision calculation. But if a high-precision calculation isn't needed, an approximate calculation is enough. And for an approximate calculation, you shouldn't need a terribly complicated algorithm that runs for a very long time. In fact, you should be able to construct a simplified picture and a calculation that may be reconstructed pretty much without a computer. In particular, if you can't derive the order-of-magnitude estimate for a quantity describing the behavior of a physical system, then you just don't understand the behavior! A computer may spit out a magic answer but you are not the computer. If you don't know exactly what the computer is doing, what it is assuming, etc., then you can't independently "endorse" the results produced by the computer, and you can't know what they depend upon and how robust the actual outcome of the program is.

Mechanical arithmetic calculations may be boring and of course they're not what mathematicians and physicists are supposed to be best at or spend most of their time on (a physicist or a mathematician is something else than an idiot savant, a simple fact that most of my close relatives are completely unaware of). On the other hand, much of the logic behind sciences and behind individual arguments in science is about solid technical thinking that may be sped up with the help of a computer but this thinking is still completely essential because science and mathematics are ultimately composed of these things! If you don't know them, you don't know science and mathematics.

An extra topic in the context of atmospheric physics is the difference between the knowledge and "character of knowledge" of meteorologists and climatologists. I would say that meteorologists are in much better shape when it comes to their "mental training" because they deal with lots of real-world data that affect their thinking. They have some experience. In comparison, climatologists work with a much smaller amount of data – they talk about 30-year averages but our record isn't too much longer than 30 years so there are just "several numbers". The predictions only face the real-world data after many decades, when the climatologist is already retired or dead, and this confrontation between real-world data and scientists' opinions is what drives the "natural selection" of ideas in empirically based disciplines – and atmospheric physics disciplines are surely textbook examples of them.

The most extreme form of the intellectual degradation is the idea that the only important quantity to be predicted is the rate of [global] temperature increase (or, almost equivalently in the climate believers' opinion, the climate sensitivity). If someone believes it's the only number to be derived and one can fudge hundreds of parameters to do so, it's too bad! Clearly, such a predictive framework is totally worthless. A predictive framework must be able to predict more numbers than the number of parameters that have to be inserted. Needless to say, my description of the situation of the "hardcore alarmed climatology" was way too kind because they don't even verify the one and only "calculated" result – the climate sensitivity. When it differs by a factor of 3 from the observations, they just don't care. So they don't predict anything. They have absolutely no data – no "threats" for their pet ideas – that could direct their construction and improvement of the theories.
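The parameter-counting point can be illustrated with a toy sketch that has nothing to do with any actual climate model: a cubic polynomial has four free coefficients, so it reproduces any four data points exactly, and a perfect "fit" therefore tests nothing. The function name `lagrange_fit` and the two made-up data sets are purely illustrative assumptions.

```python
from fractions import Fraction

def lagrange_fit(xs, ys):
    """Return the unique polynomial of degree < len(xs) passing through all
    points (xs[i], ys[i]). It has len(xs) free parameters, exactly as many
    as the numbers it is asked to reproduce. The xs must be distinct."""
    def p(x):
        total = Fraction(0)
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = Fraction(yi)
            for j, xj in enumerate(xs):
                if j != i:
                    term *= Fraction(x - xj, xi - xj)
            total += term
        return total
    return p

xs = [0, 1, 2, 3]
data_a = [1, 4, 1, 5]   # made-up "observations"
data_b = [9, 2, 6, 5]   # completely different made-up "observations"

fit_a = lagrange_fit(xs, data_a)
fit_b = lagrange_fit(xs, data_b)

# Both fits are exact, whatever the data were.
residuals_a = [fit_a(x) - y for x, y in zip(xs, data_a)]
residuals_b = [fit_b(x) - y for x, y in zip(xs, data_b)]

# Only a fifth number, not used in the fit, could distinguish the "theories".
print(residuals_a, residuals_b, fit_a(4), fit_b(4))
```

With four parameters and four data points the residuals vanish identically, so agreement with those four numbers carries no information. Only predictions beyond the fitted numbers can be confronted with observations.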

01 April 2013

29 March 2013

21 March 2013

Sasha Polyakov joins the Milner Prize winners

Nine physicists won the inaugural $3 million Fundamental Physics Prize last summer and several winners were added in December.

When he was a bit younger. See his picture with a supplement to the prize. The ceremony at the Geneva International Conference Center took place yesterday and resembled the Oscars, too.

Yuri Milner wants to make everyone who matters rich. But given the finiteness of his wealth, he may be forced to switch to a lower pace at some point and only add one $3 million winner a year. So far, it seems to be the case in 2013. The new winner is spectacular, too: it's Alexander Markovich Polyakov (*1945 Moscow).

I officially learned about the new winner from a press release a minute ago but during that minute, I was already thinking what I would write about Polyakov and here is the result.

Polyakov's INSPIRE publication list shows 197 papers with 31,000 citations in total. That's truly respectable but in some sense, it may still understate his contributions to the field.

Up to the mid 1970s, he would work at the Landau Institute in Chernogolovka (Blackhead) near Moscow. You know, Lev Landau was a great physicist and the training that his institute gave to younger Soviet physicists was intense.

At that time, he was already working on two-dimensional field theory, although the physical motivation often had something to do with ferromagnets, the IR catastrophe caused by 2D Goldstone bosons, and other things. However, around 1975, he moved to the West: he started with Nordita, which was founded in Copenhagen in 1957 with Niels Bohr among its founders, and continued with Trieste, Italy. It's my understanding that he has kept peaceful and productive relationships with the Soviet scientific institutions.

For decades, we've known him as a top physicist at Princeton University in New Jersey, of course. Ironically enough, I haven't spent any substantial time talking to him although I was a grad student just 15 miles away, at Rutgers. But I have talked a lot to virtually all of his recent collaborators and I did spend some time in his office. In the late 1990s, they turned it into my waiting room before a seminar. So I looked at the science books, papers, and some materials about the candidates for another prize he was evaluating as a member of the Nobel committee.

(If this information was secret, it should have been hidden more carefully! Just to be sure, I haven't touched any of those confidential papers and I did promise myself to be silent for 10 years about everything I could have seen without touching, too. This commitment wasn't breached.)

Polyakov's physics: AdS/CFT

More seriously, Sasha Polyakov is a giant because he is a string theory pioneer and because he has cracked many phenomena in gauge theories – and be sure, gauge theory and string theory are the two key theoretical frameworks of contemporary high-energy physics – especially when it comes to the phenomena that can't be analyzed by perturbative techniques (by simple Feynman diagrams that work at the weak coupling).

In general, these discoveries allowed the physicists to attack questions that could previously look inaccessible and many of these insights helped to reveal the logic that implies that gauge theory and string theory, at least in some "superselection sectors", are equivalent. These days, we understand many of these insights as aspects of the AdS/CFT correspondence.

His most cited paper (with over 6,000 citations, which shows how much the holographic correspondence has been studied), co-authored with Steve Gubser and Igor Klebanov (GKP), is about the correlators in the AdS/CFT correspondence. It came a few months after Maldacena's paper, and Edward Witten published similar results independently at roughly the same time.

Maldacena's claim that string theory in the AdS space was equivalent to the maximally supersymmetric gauge theory or another theory on the boundary was true but it was missing some "teeth" needed to calculate "anything" we're used to calculating on both sides of the equivalence. The GKP results created a dictionary that allowed one to translate the most typical observables – correlation functions – to the other description. Note that the paper talks about the "non-critical string theory" etc. even though it immediately says that this "non-critical string theory" is linked to or derived from the "ordinary" 10-dimensional critical string theory. This is clearly an idiosyncrasy added to the paper by Polyakov himself; the co-authors would surely avoid references to "non-critical string theory" if everything in the physics was fundamentally "critical string theory".

This handwriting of Polyakov's is manifest in most of his other papers on string theory in the last 20 years. He had spent quite some time with "general strings" – such as the "flux tubes" in QCD – 20 years earlier and these "formative years" have affected his thinking. But you should have no doubts that these days, he understands that the dualities relate gauge theories to the 10-dimensional string theory. He just got to that conclusion and other conclusions differently than many other physicists.

For years, Polyakov has been a visionary and he has outlined many visions. They often turned out to be rather accurately reproduced by the actual technical revolutions in the field. But some details of Polyakov's visions were slightly incorrect or insufficiently ambitious, we could say. It's perhaps inevitable for a great visionary that even these aspects of his visions that weren't quite confirmed (the importance of "non-critical string theories" in the holographic dualities is a major example) sometimes leave traces in his papers and talks.

BPZ and two-dimensional CFTs

The second most cited paper he co-authored (with over 4,000 citations) was written at the Landau Institute in 1983 (and published in 1984) along with Belavin and Zamolodchikov; yes, all three authors were Sashas (I know Zamolodchikov very well, from Rutgers).

When you're learning string theory these days, you must master various methods to deal with the two-dimensional world sheet of the propagating (and joining and splitting) strings. The world sheet theory may look like "just another" quantum field theory, with its Klein-Gordon and other fields. Its spacetime (I mean the world sheet) has a low dimension but you could think that this difference is just a detail. Many beginners who want to learn the world sheet methods expect that they will do exactly the same things as they did in the introductory QFT courses but \(d^4 x\) will be replaced by \(d^2 x\) everywhere. ;-)

However, it's not the case. The theory on the world sheet is conformal. Its physics is scale-invariant (no preferred length scales or mass scales) and it is, in fact, invariant under all angle-preserving transformations, the so-called conformal transformations. When you look at these symmetries in the context of a \(d\)-dimensional space or spacetime, you will find out that they form the group \(SO(d+1,1)\) or \(SO(d,2)\), respectively. (The increased argument in \(SO(x)\) is an early sign of holography.)
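As a quick consistency check of the group quoted above, one may count the generators of the conformal algebra in \(d\) dimensions. The split into translations, rotations (or Lorentz transformations), the dilatation, and special conformal transformations is the standard textbook one rather than something spelled out in this text: \[
\underbrace{d}_{\text{translations}} + \underbrace{\frac{d(d-1)}{2}}_{\text{rotations}} + \underbrace{1}_{\text{dilatation}} + \underbrace{d}_{\text{special conformal}} = \frac{(d+1)(d+2)}{2} = \dim SO(d+1,1).
\] For \(d=2\), this gives \(6\) real generators, which agrees with the three complex parameters of the Möbius transformations.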

This cute music video shows the "ordinary" conformal transformations of the two-dimensional plane that form the group \(SO(3,1)\sim SL(2,\mathbb{C})\), the so-called Möbius transformations. They're the only one-to-one conformal transformations of the plane (including one point at infinity) onto itself.
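The link between Möbius transformations and \(2\times 2\) complex matrices can be checked numerically. The following is a minimal sketch, assuming the usual parametrization \(z\mapsto (az+b)/(cz+d)\) with \(ad-bc\neq 0\); the helper names `mobius` and `matmul2` and the sample coefficients are illustrative choices, not anything taken from the text.

```python
def mobius(a, b, c, d):
    """Return the map z -> (a*z + b) / (c*z + d), assuming a*d - b*c != 0."""
    if a * d - b * c == 0:
        raise ValueError("degenerate matrix: a*d - b*c must be nonzero")
    def f(z):
        return (a * z + b) / (c * z + d)
    return f

def matmul2(m, n):
    """Product of two 2x2 matrices stored as flat tuples (a, b, c, d)."""
    (a, b, c, d), (e, f, g, h) = m, n
    return (a * e + b * g, a * f + b * h, c * e + d * g, c * f + d * h)

# Two arbitrary sample matrices (hypothetical values) and a sample point.
M = (1 + 2j, 0.5, 0.3j, 1)
N = (2, -1j, 1, 1 + 1j)
z = 0.7 - 0.2j

composed = mobius(*M)(mobius(*N)(z))      # apply N first, then M
via_matrix = mobius(*matmul2(M, N))(z)    # single map built from the product M.N

print(abs(composed - via_matrix))         # agreement up to rounding error
```

Rescaling a matrix by a nonzero complex number doesn't change the map, which is why one may normalize the determinant to one and talk about \(SL(2,\mathbb{C})\), up to an overall sign.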

In two dimensions and only in two dimensions (and this is the uniqueness that makes strings special among the would-be fundamental extended objects), the conformal group is actually greater and infinite-dimensional as long as you allow transformations that can't be extended to one-to-one transformations of the whole plane onto itself (if you allow the plane to be wrapped, the maps to be non-simple, and so on). You should remember from your exposure to the complex calculus that any holomorphic function \[

z\mapsto f(z)

\] preserves the angles wherever its derivative is nonzero. This fact may also be expressed by the Cauchy-Riemann equations and has been extremely helpful in solving various two-dimensional problems, e.g. differential equations with complicated boundary conditions in fluid dynamics in 2 dimensions. In string theory, the conformal symmetry allows us to show the equivalence of the plane and the sphere, the half-plane and the disk, the annulus and the cylinder, and the infinitely long tube added to a Riemann surface to a local insertion of the (vertex) operator, among other things. The symmetry is also the reason why the loop diagrams in string theory remain finite-dimensional despite the infinite number of shapes of Riemann surfaces.
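The angle-preserving property is easy to test numerically. The sketch below compares the angle between two directions at a point with the angle between their images, using central finite differences. The sample functions `holo` and `non_holo`, the point `z0`, and the step `eps` are arbitrary illustrative assumptions.

```python
import cmath

def angle_between(u, v):
    """Signed angle from the complex direction u to the complex direction v."""
    return cmath.phase(v / u)

def image_direction(f, z0, direction, eps=1e-6):
    """Central finite-difference tangent of the curve t -> f(z0 + t*direction) at t = 0."""
    return (f(z0 + eps * direction) - f(z0 - eps * direction)) / (2 * eps)

def holo(z):
    # A holomorphic sample function; its derivative is nonzero at z0 below.
    return z**3 + cmath.exp(z)

def non_holo(z):
    # Depends on the complex conjugate, so it is not holomorphic:
    # it stretches the real axis by 1.5 and the imaginary axis by 0.5.
    return z + 0.5 * z.conjugate()

z0 = 0.3 + 0.4j
u = 1 + 0j
v = cmath.exp(1j * cmath.pi / 3)   # direction rotated by 60 degrees relative to u

angle_before = angle_between(u, v)
angle_holo = angle_between(image_direction(holo, z0, u),
                           image_direction(holo, z0, v))
angle_non_holo = angle_between(image_direction(non_holo, z0, u),
                               image_direction(non_holo, z0, v))

print(angle_before, angle_holo, angle_non_holo)
```

The holomorphic map multiplies both directions by the same complex number \(f'(z_0)\), so the angle between them survives. The map involving \(\bar z\) squeezes the two axes differently and the angle changes.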

When the two-dimensional quantum field theory is conformal, one discovers lots of new laws, identities, and methods of calculation that don't exist for generic quantum field theories, and BPZ discovered many of them. Because the theory is invariant under an infinite-dimensional conformal group, or at least its Lie algebra (the Virasoro algebra), one may organize the states into representations of this algebra. Much like in the Wigner-Eckart theorem (where the rotational group plays the role we expect from the Virasoro algebra here), the correlation functions of the operators (which are mapped to states in a one-to-one way) may also be expressed using a smaller number of functions, the so-called "conformal blocks". These conformal blocks are associated with individual representations of the Virasoro algebra and sums of their products yield the correlation functions.
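For orientation, here is the algebra in question in the standard textbook normalization, which is an assumption here because the text above doesn't fix any conventions. The generators \(L_n\), \(n\in\mathbb{Z}\), and the central charge \(c\) obey \[
[L_m, L_n] = (m-n)\,L_{m+n} + \frac{c}{12}\,m(m^2-1)\,\delta_{m+n,0},
\] with an independent copy \(\bar L_n\) for the antiholomorphic sector. Schematically, and suppressing the conventional prefactors that depend on the operator positions, a four-point function then decomposes as \[
\langle \phi_1\phi_2\phi_3\phi_4\rangle \sim \sum_p C_{12p}\,C_{34p}\,\mathcal{F}_p(z)\,\bar{\mathcal{F}}_p(\bar z),
\] where \(p\) runs over the representations appearing in the intermediate channel, \(C_{ijp}\) are the three-point (structure) constants, \(\mathcal{F}_p\) are the conformal blocks, and \(z\) is the cross-ratio of the four insertion points. The symbols \(C_{ijp}\), \(\mathcal{F}_p\), and \(z\) are introduced here for illustration only.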